Key takeaways

- AI search engines respond differently, producing unique answers even to the same query.

- Minor wording changes can noticeably alter AI-generated responses.

- Clear formatting helps AI better interpret and display your content.

- User intent shapes how generative engines organize and present results.

- Consistent business listings boost visibility across multiple AI search engines.

With so many generative engines to choose from, people pick favorites and take sides. Whether you’re an active AI search user or a beginner, you probably have a platform you gravitate toward. Maybe you like ChatGPT’s thoroughness or Perplexity’s frequent citations.

But that raises the question: Will you have the same experience with your preferred AI search engine that others have with theirs?

If it’s anything like my co-worker’s experience, the answer is not really.

When I had the idea to write a blog post focusing on how AI search engines respond to the same search query, she immediately had a story.

When she started to explore different generative AI tools, she took three of them and asked the tools to turn her into a Peanuts character. She fed them the same prompt and asked them to work their magic.

Two of them did. The other one…well…tried.

Needless to say, one of these things is not like the other. One of these things just doesn’t belong.

So, that solidified it: I had to dive in and see how different generative engines respond to the same query. And although what I uncovered isn’t as funny as the image above, it did reveal some interesting insights about how AI search engines produce responses to queries.

In this guide:

- Understanding Generative Engines

- Background on This Exploration

- How Generative AI Search Engines Produce Responses to the Same Queries

- Overall Insights from This Generative AI Response Exploration

Understanding generative engines

All generative engines have a similar operating structure, but it’s not identical. Generally, they all train on a set of data and use decoding strategies to turn the model’s predictions into a response. They’re all also sensitive to how a prompt is worded, which shapes what information appears in the response.

But where they differ is how the components within that structure are built. For example, ChatGPT may train on a completely different data set than Claude. Claude may use different decoding strategies than Gemini. So while they’re similar at the base structure, how that structure is built differs between generative engines.

As a result, you don’t get identical answers from these engines. Which prompts the question: How do responses differ on popular generative engines?

Background on this exploration

To explore the question at hand, I pulled a list of the top generative AI platforms to feed the queries to. I opted for the big ones:

- AI Overviews

- ChatGPT

- Google AI Mode

- Perplexity

- Claude

- Gemini

- Copilot

From there, I decided to take a query-type approach to better represent the types of queries people ask and how the type of query can impact the output from a generative engine.

So, I opted to hit four major query categories:

- Question-based queries: Like the name suggests, these are queries where you ask a simple question to start the conversation (Ex. “What is the best way to build a planter box?”). This excludes questions with a purchase or local intent.

- Research-based: These queries are more in-depth and typically ask more from the generative engine. They’re prompt-based queries that include all the details the searcher needs from the AI search engine.

- Shopping-based: These are queries where the intention involves purchasing a product, whether at the moment or in the future (Ex. “I’m looking for a water bottle that keeps water cold for 24 hours. What are the best options?”)

- Location-based: These are queries that include a location tag to indicate a geographical focus. (Ex. “Who is the best HVAC company in Denver?”)

For each query type, I put the same exact question or prompt into each of the generative engines listed above and compared their responses.

Keep in mind that, for each query type, I looked at one prompt per category. So, the insights from this exploration may slightly vary depending on the query topic, but there are general overarching similarities and differences that you can take away regardless of query type.

It’s also important to know that responses will shift depending on the user and the day. Generative engines aren’t static: they shift who they cite, how they present information, and more. But having general insight into how these engines respond to queries can help you stay agile as you optimize for AI search engines.

How generative AI search engines produce responses to the same queries

Let’s explore the four different query types to see how the most popular generative engines produce responses to them.

Query type #1: Question-based

For the question-query type, I input the question “How can I save on my energy bill?” into each generative engine.

Here were the responses:

Query: How can I save on my energy bill?

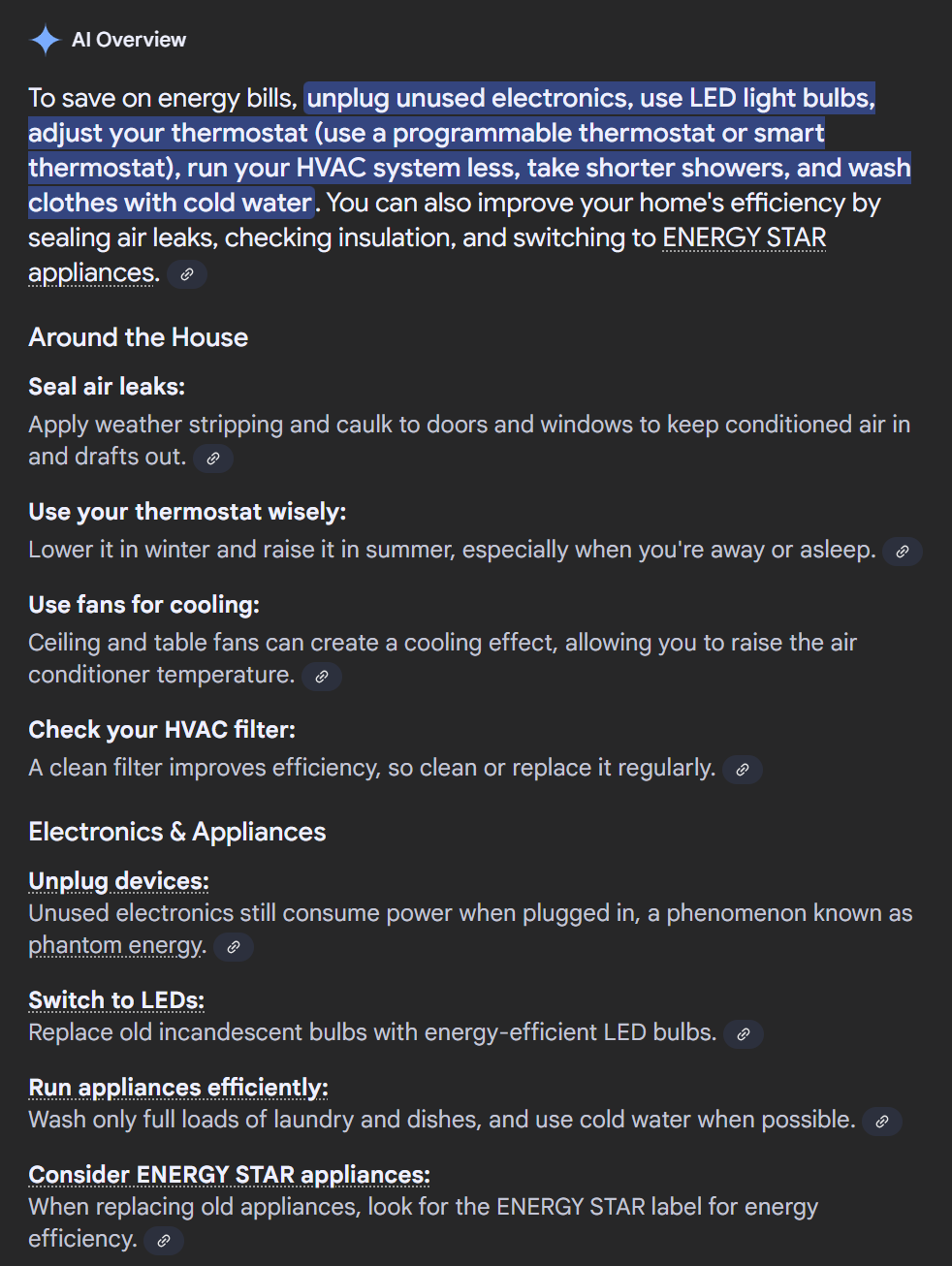

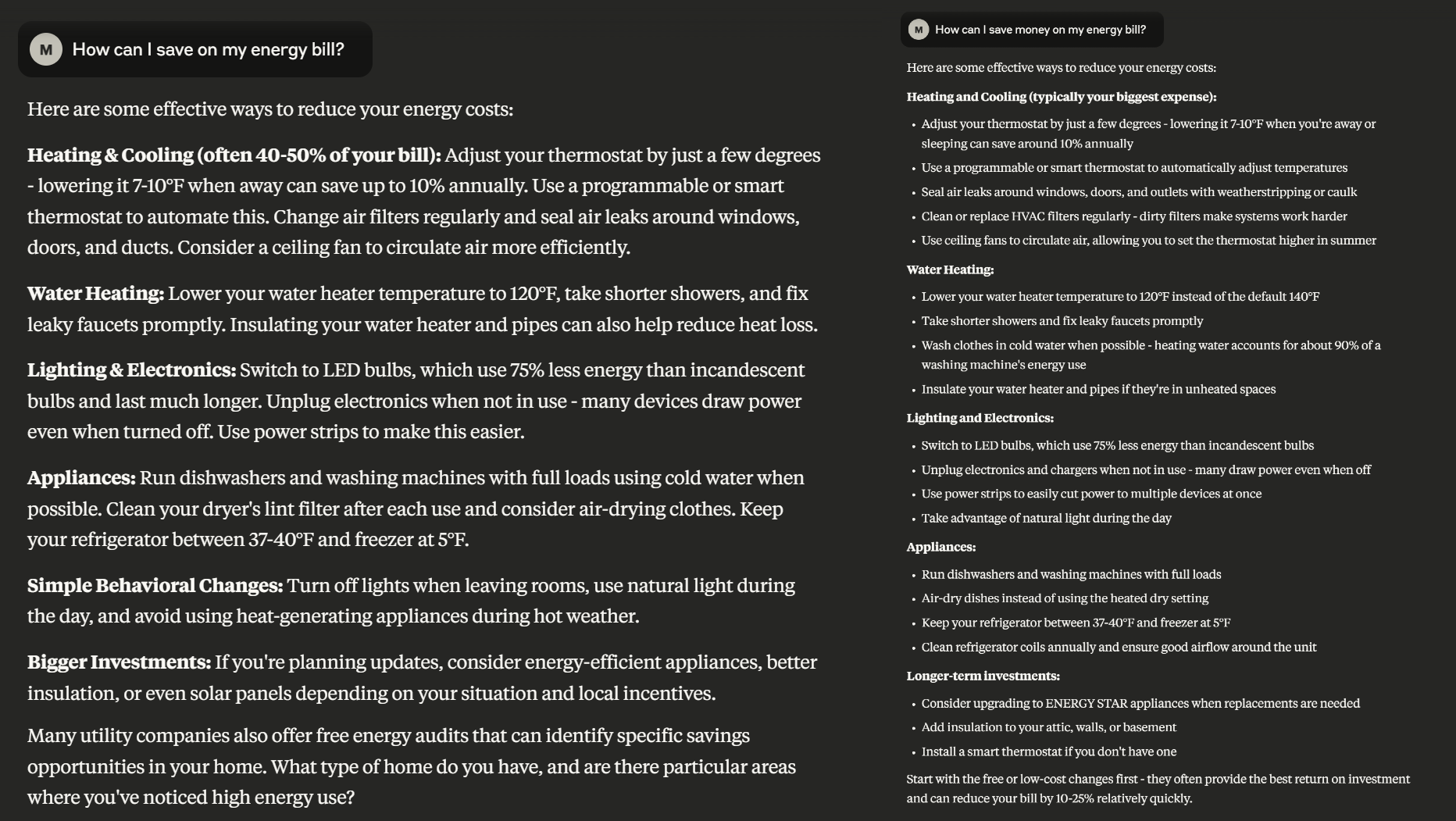

AI Overviews’ response

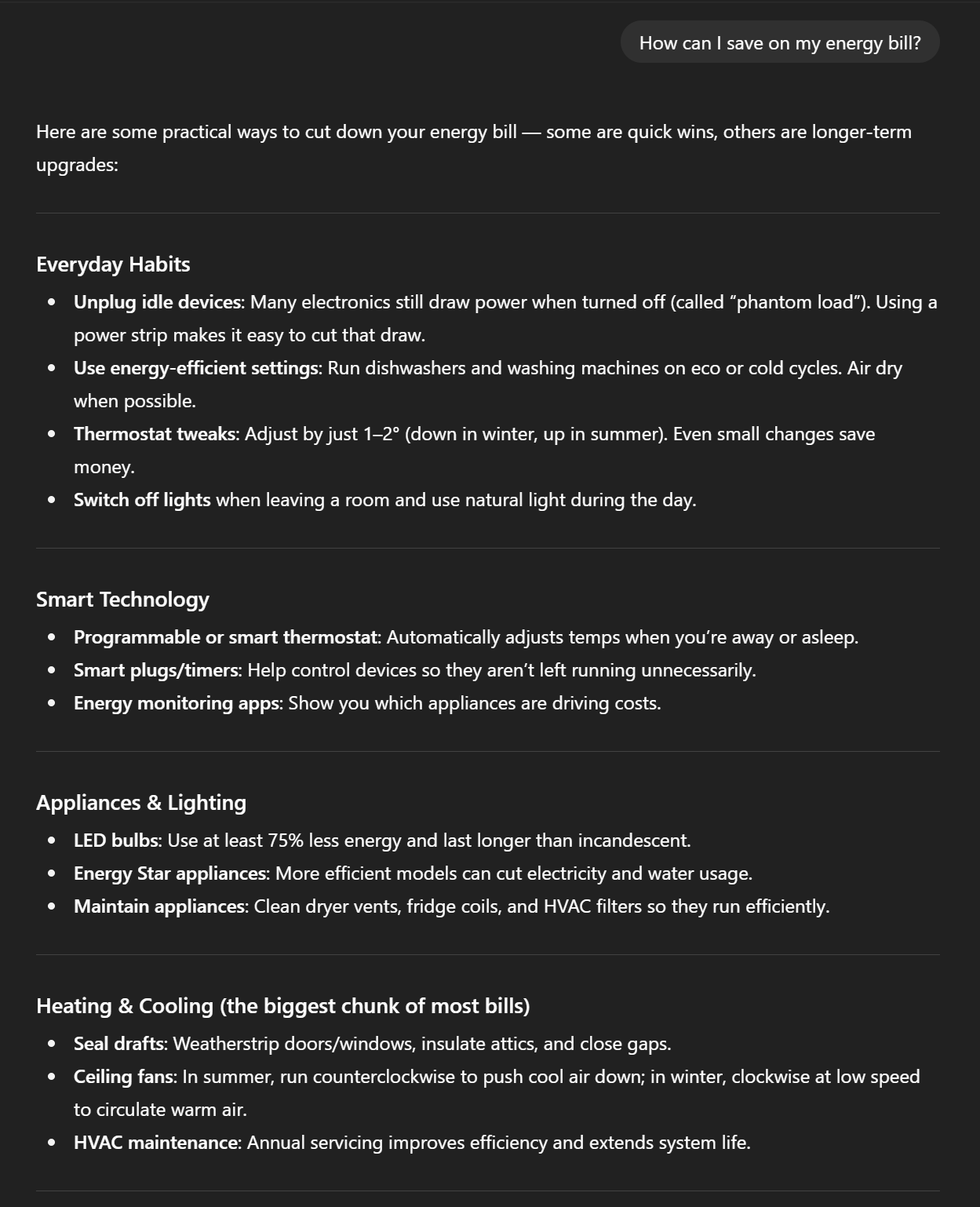

ChatGPT’s response

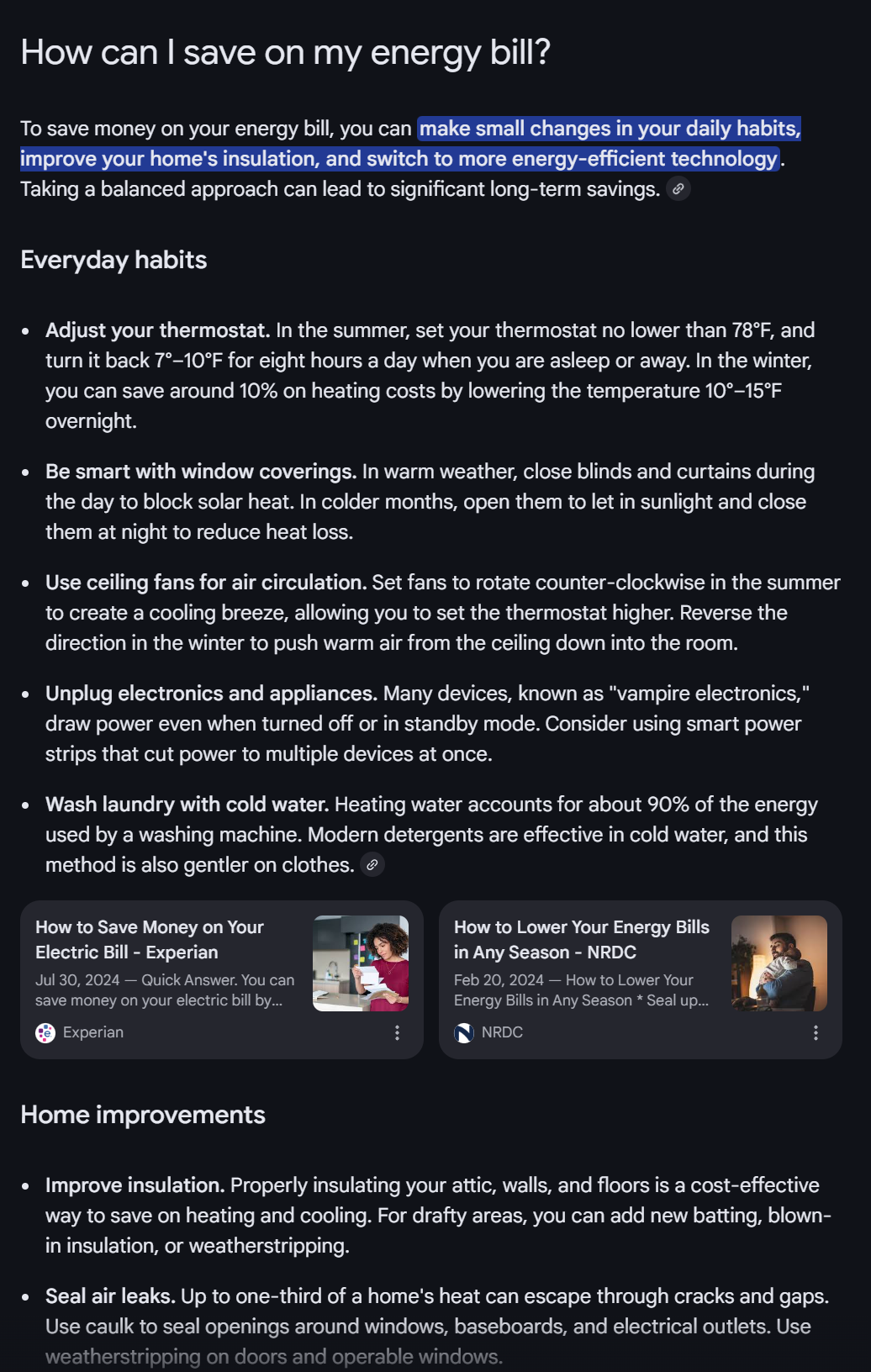

Google AI Mode’s response

Perplexity’s response

Claude’s response

Gemini’s response

Copilot’s response

Here are some key insights from searching this query type:

- Most of the generative engines pulled the same information. They focused on things like heating and cooling, home efficiency upgrades, and appliance usage.

- ChatGPT, AI Mode, Perplexity, and Gemini all produced similar formatted responses. They generally categorized information by areas where you can improve (like the ones listed above).

- The four above platforms also took a user-focused approach by dedicating a section to changing everyday habits or behaviors.

- AI Overviews and Claude gave very short, succinct responses to the query. All the other platforms provided more detail and insights than these two platforms.

The most interesting response from this crowd came from Copilot. Despite the query not having outward local intent, it took a localized approach to the response. This query has a somewhat “hidden” local intent, where the tips you might take advantage of depend on your location and climate.

Not only did Copilot pull my city location into the response, but it also gave me a Pennsylvania-specific resource I could check out for more information on energy efficiency. None of the other platforms I tested with this query did this.

Additional exploration: Claude & query modification

As mentioned earlier, Claude gave one of the shortest responses out of all the engines. It was very succinct and to the point. There weren’t even subheadings to organize the tips into categories, which most of the other engines used!

That got me thinking — What would happen if I just ever-so-slightly tweaked the query?

So I did.

Instead of searching, “How can I save on my energy bill?” I searched, “How can I save money on my energy bill?”

Only a one-word difference. Would it matter?

Turns out, it did. Claude produced a much more in-depth response to the modified query than it did to the original.

In the image comparison below, you can see how the length of response is much longer after adding the word “money” to the query. There are also headings present to organize the information.

When I ran this same modified query through Gemini, the information it shared changed, too. It offered information about government programs I could take advantage of that included tax credits, rebates, and other money-saving tips.

So, what does this mean?

It means that even the slightest modifications to a query can change the information in the response, which can, in turn, impact what information you put on your pages.

How you can take action based on this exploration

If you’re targeting question-based queries, here are a few takeaways you can apply to your AI search optimization strategy:

Organize and categorize your page content

The majority of the engine responses verified something marketing experts have been preaching with AI — organize the information on your pages.

Using subheadings to organize information on your page has multiple benefits. It helps AI crawlers better understand what’s on your page, and it provides context that makes the content easier to interpret.

Subheadings help readers, too. They let people know what to expect in a section and skip ahead to the section they’re looking for.

So, take advantage of subheadings to help organize information, so both readers and generative engines can access your information easily.
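One way to check how a page is organized is to print its heading outline. The following is a minimal sketch that uses only Python’s standard library. The `HeadingOutline` class, the `outline` helper, and the sample page are hypothetical names and data for illustration, and the sketch says nothing about how any particular AI crawler actually parses a page.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for every h1-h6 element in a page."""

    HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.headings = []   # finished (level, text) pairs
        self._level = None   # level of the heading currently open, if any
        self._parts = []     # text fragments inside the open heading

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADING_TAGS:
            self._level = int(tag[1])
            self._parts = []

    def handle_data(self, data):
        if self._level is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADING_TAGS and self._level is not None:
            # Collapse whitespace so multi-line headings read cleanly.
            text = " ".join("".join(self._parts).split())
            self.headings.append((self._level, text))
            self._level = None


def outline(html):
    """Return the page's headings in document order."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.headings


# Hypothetical page fragment used only for illustration.
sample_page = """
<h1>How to save on your energy bill</h1>
<p>Intro paragraph.</p>
<h2>Heating and cooling</h2>
<p>Tips go here.</p>
<h2>Appliance usage</h2>
<h3>Laundry</h3>
"""

for level, text in outline(sample_page):
    print("  " * (level - 1) + text)
```

If the printed outline reads like a sensible table of contents, the page is probably organized well enough for a reader to skim. Long stretches of content with no headings show up as gaps in the outline.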

Account for keyword modifications

My little side quest to modify the original query showed one big thing — keyword modifications matter. You may think that adding one word doesn’t make a difference, but realistically, it provides additional context for LLMs, which triggers different information to populate.

This is a difference from traditional SEO. With traditional SEO, targeting both “save on my energy bill” and “save money on my energy bill” would produce the same websites sharing the same information, with maybe a few varying sources.

But when it comes to LLMs, the subtle differences allow these platforms to give more granular details, which alters the response.

So, whether you’re updating an old page or creating a new one, you need to account for how your keyword or phrase may be modified. Put your related keywords into an LLM and see how the response changes from the original target keyword/phrase. It’ll help you identify information gaps you can address to have a better chance of appearing in LLM results.
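To make that comparison less subjective, you can save the response to each query variation and compare them side by side. The sketch below assumes you have pasted each response into a string yourself and that headings in the saved text start with `#` (markdown style). It does not call any engine’s API. The function names and both sample responses are hypothetical.

```python
import re


def summarize(response):
    """Return basic structure stats for one saved response."""
    lines = [line.strip() for line in response.splitlines() if line.strip()]
    # Assumption: headings in the saved text are markdown-style ("# ...").
    headings = [line.lstrip("#").strip() for line in lines if line.startswith("#")]
    words = re.findall(r"[a-z']+", response.lower())
    return {"words": len(words), "headings": headings, "vocab": set(words)}


def compare(original, modified):
    """Describe what the modified query's response adds over the original's."""
    a, b = summarize(original), summarize(modified)
    return {
        "word_delta": b["words"] - a["words"],
        "new_headings": [h for h in b["headings"] if h not in a["headings"]],
        "new_terms": sorted(b["vocab"] - a["vocab"]),
    }


# Hypothetical saved responses, not the actual output from any engine.
original_response = "Lower your thermostat and unplug idle appliances."
modified_response = """## Heating and cooling
Lower your thermostat.
## Rebates
Check for tax credits and rebates."""

report = compare(original_response, modified_response)
print(report["word_delta"])
print(report["new_headings"])
print(report["new_terms"])
```

Terms and headings that only appear for the modified query are candidates for the information gaps mentioned above. Because responses change from day to day, it is worth treating a single comparison as a snapshot, not a rule.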

Query type #2: Research-based

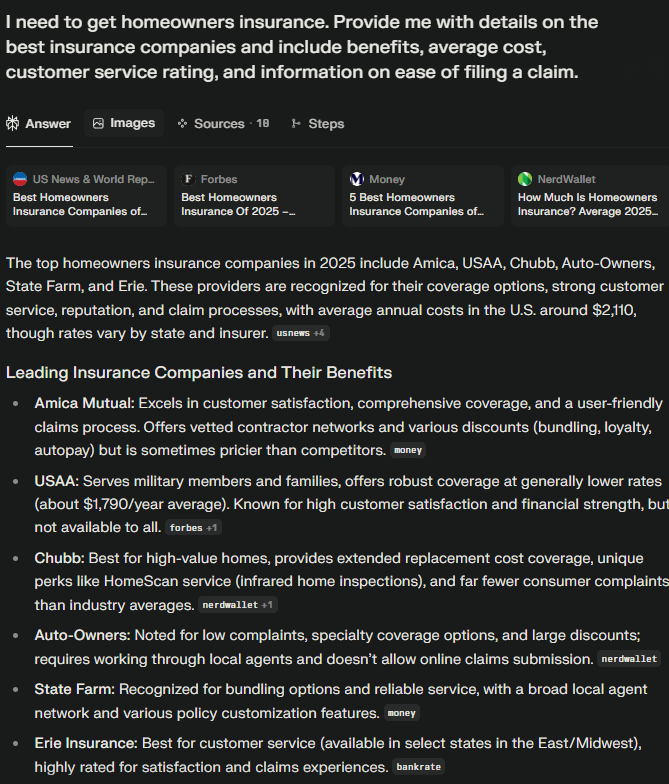

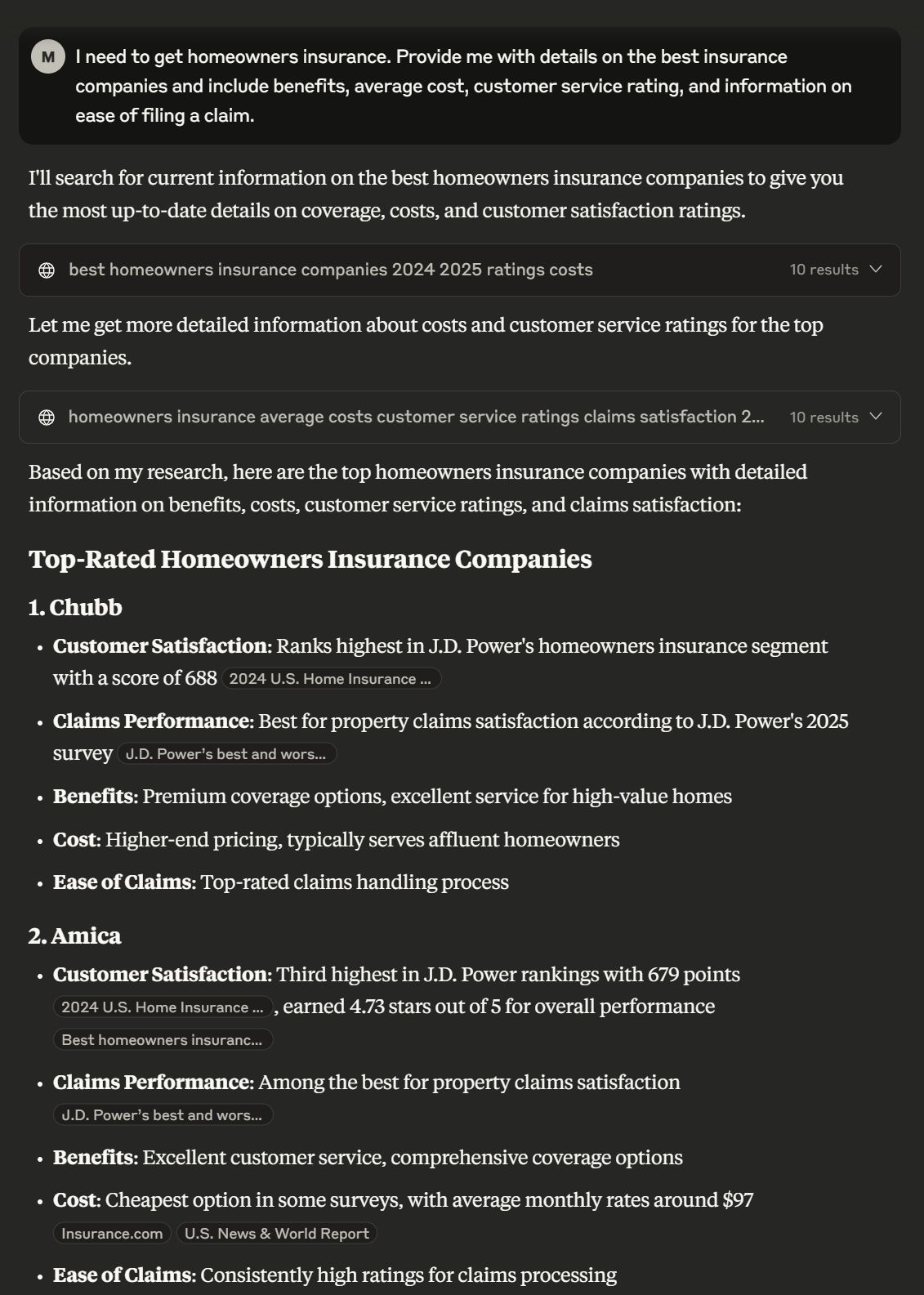

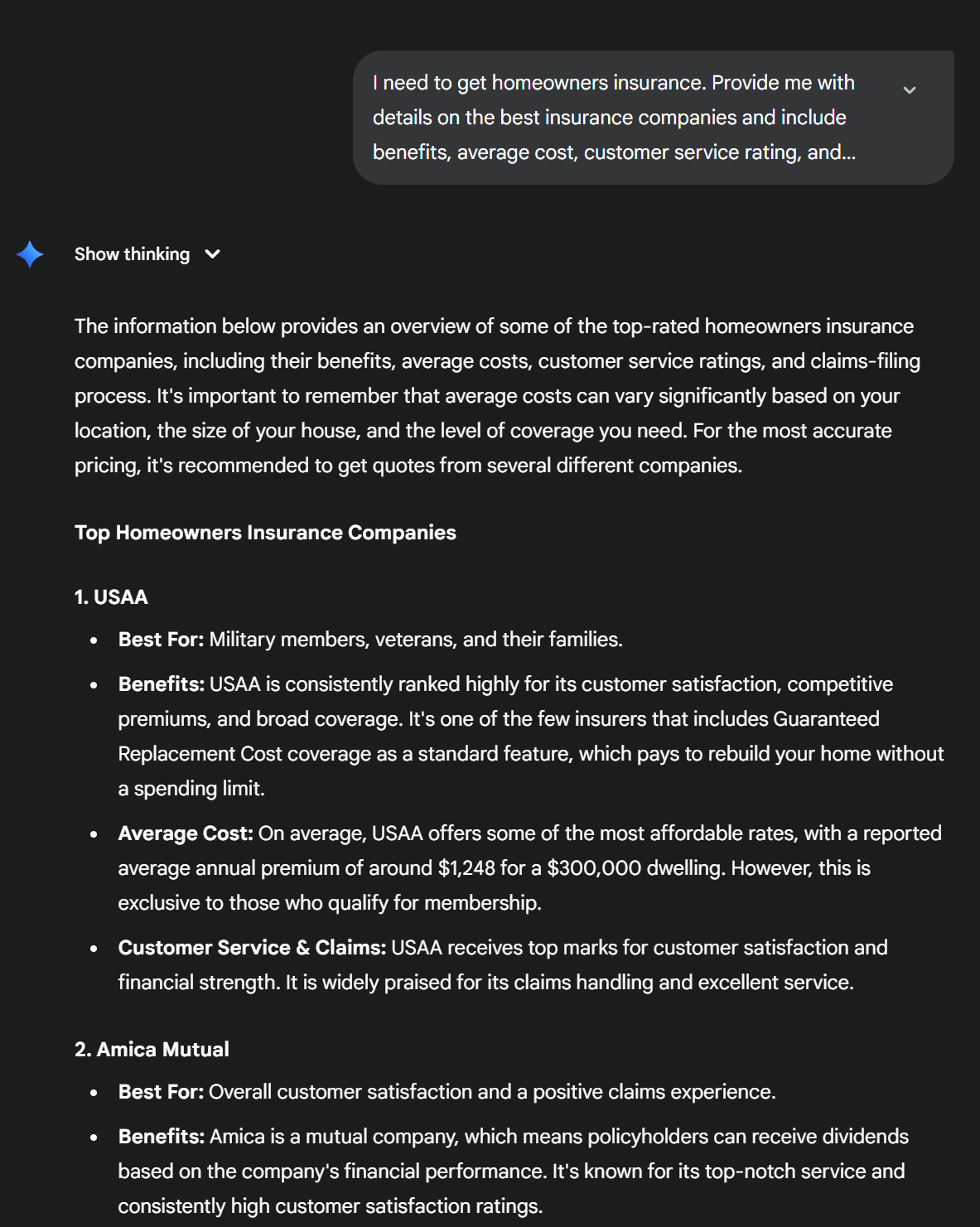

For this query type, I gave all the generative engines a prompt of me looking for homeowners insurance and specified the information I wanted to know.

Here were the responses:

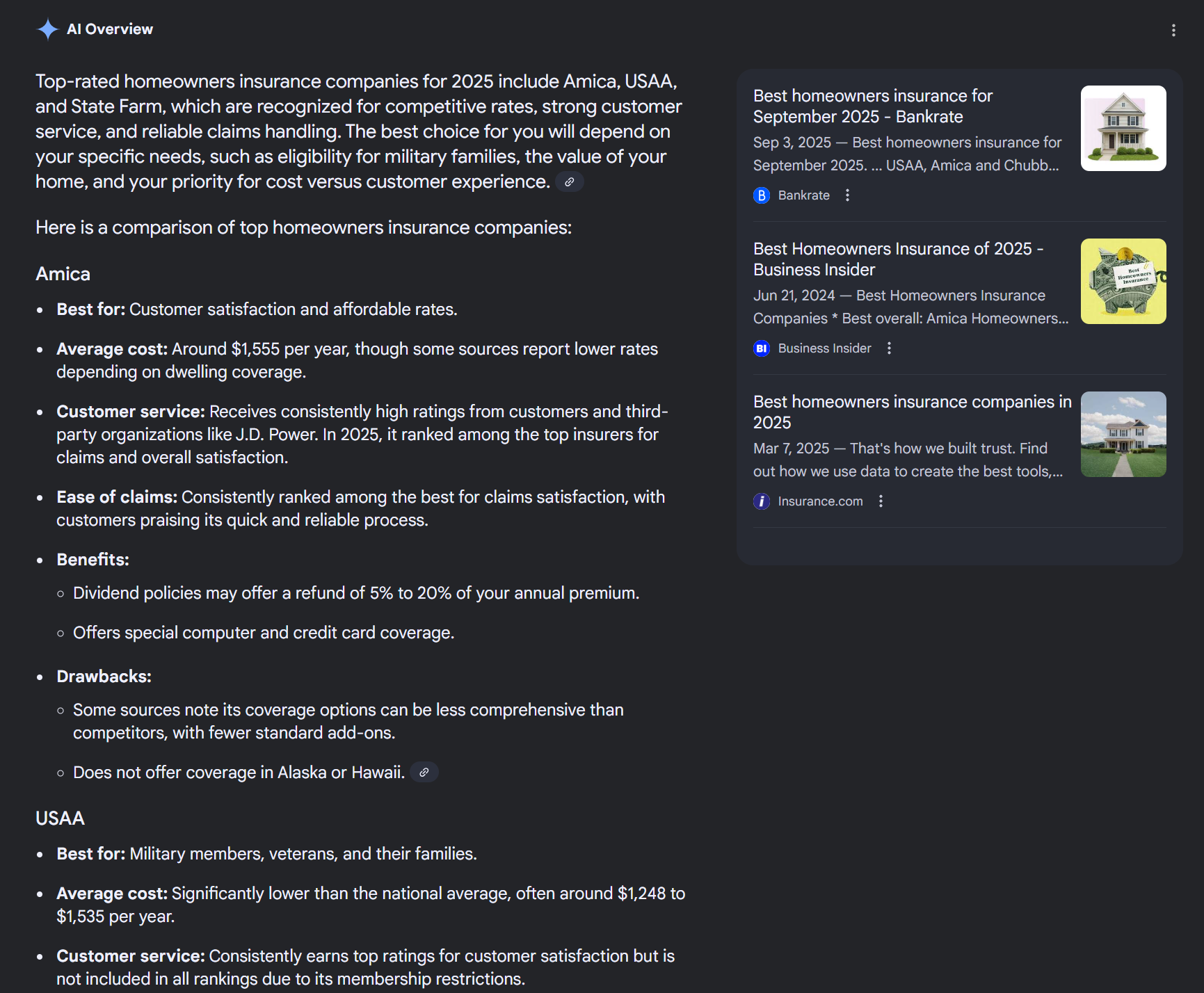

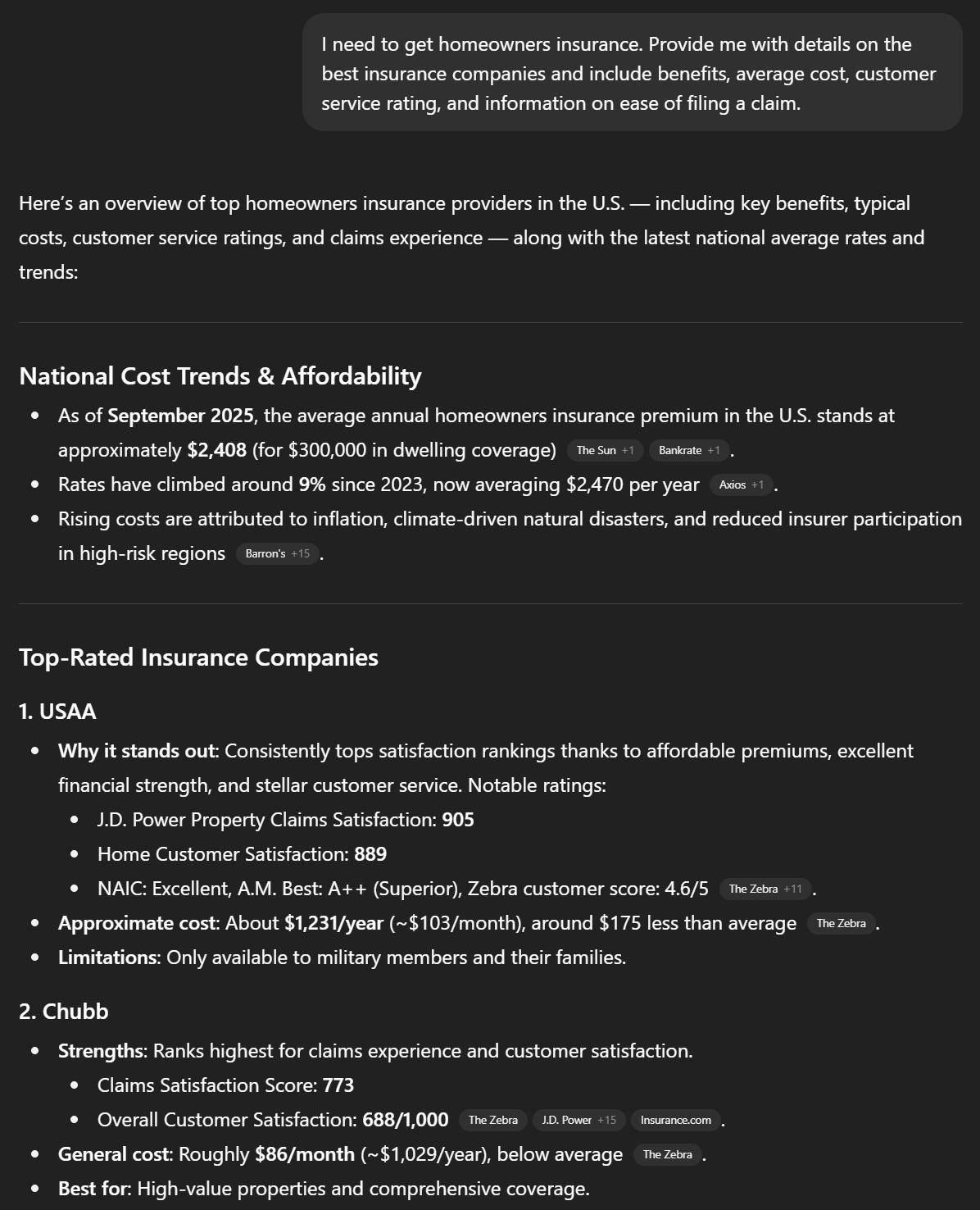

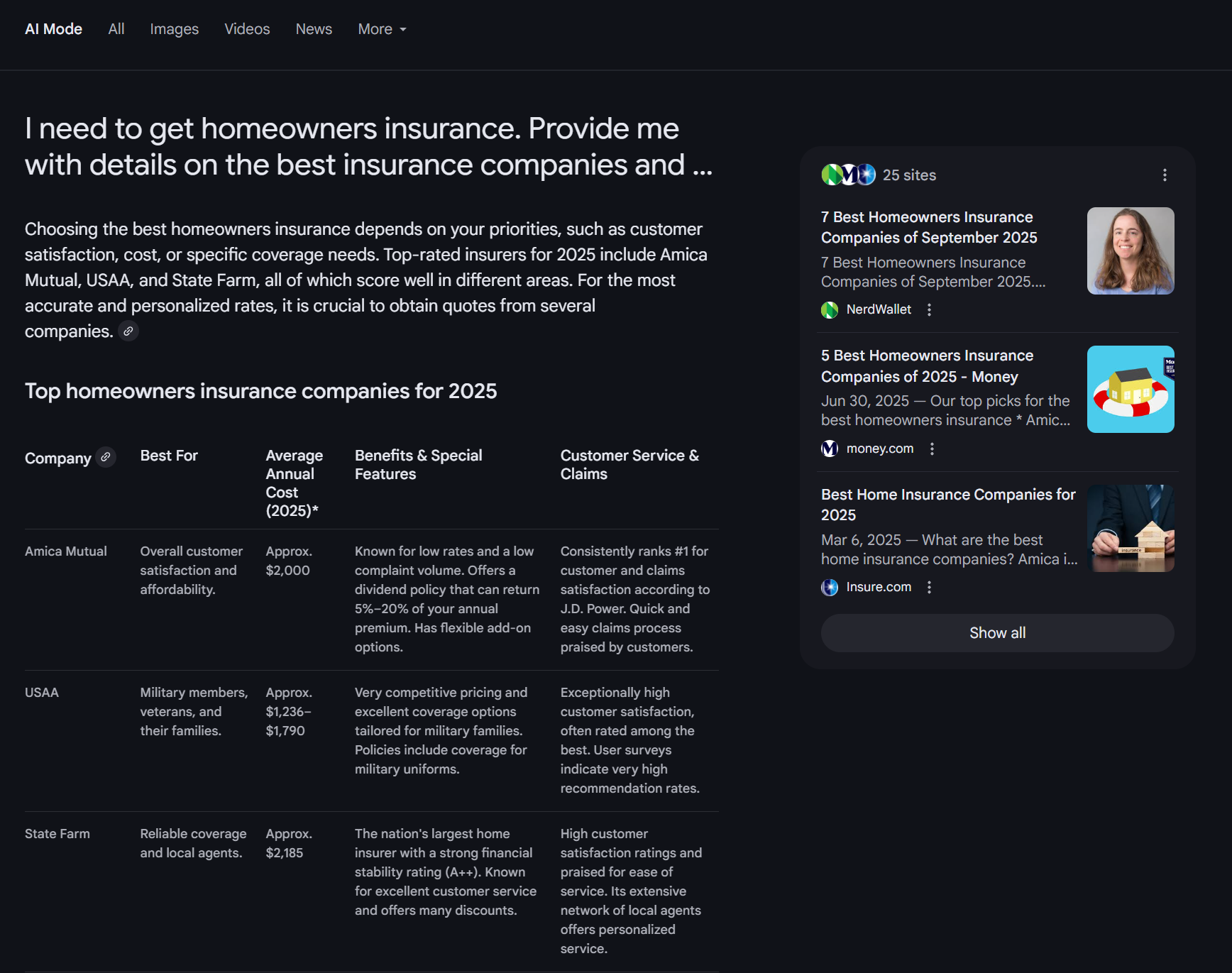

Query: I need to get homeowners insurance. Provide me with details on the best insurance companies and include benefits, average cost, customer service rating, and information on ease of filing a claim.

AI Overviews’ response

ChatGPT’s response

Google AI Mode’s response

Perplexity’s response

Claude’s response

Gemini’s response

Copilot’s response

Here are some key insights from these queries:

- All the engines provided the requested information. Surprisingly, even after I loaded the wordy prompt into Google, AI Overviews still produced information on every aspect I wanted to know. The big differentiator, though, was the formatting.

- All but Claude, AI Overviews, and Gemini provided the information in a table. With this query specifically, the information I requested was presented in an easy-to-follow table, where it broke down things like cost, customer service feedback, and information on filing claims.

- All but Gemini provided citations for information. This was one of the most interesting finds from comparing the responses to this query: Gemini was the only engine that didn’t cite its sources.

Copilot, once again, delivered a different experience — it gave me a response with a hint of location-based customization. While it listed the same companies as other generative engines, it did include a Pennsylvania-specific insurance company I could use. So, yet again, the information from Copilot was a little more tailored to me.

How you can take action based on this exploration

Here are a few takeaways from this query example:

When in doubt, put the user first

With this exploration, organization was big. The majority of the generative engines presented the information in a table because, ultimately, a user like me is looking to compare to find the company that seems best suited to my needs.

So, instead of reading about each company individually, I can easily see them side-by-side and compare the important aspects I care about (cost, ease of filing a claim, etc.).

That means: When in doubt, put the user first.

Having a page that presents the information in the most logical format is an effective way to help AI bots easily crawl and digest the information, but also provide a positive user experience for anyone who visits your page.

It really means boiling it down to what the user is looking for and what they want from the query. With a query like this one, the indicator is that I would like to compare companies, so AI reflected that.

While inserting something like a table, in this instance, doesn’t mean AI will just pull your entire table, it does make it easier for it to use the information on your page to fill in the blanks (and maybe even get cited!).

Be thorough

If this prompting exploration proves anything, it’s the importance of being thorough. Creating second-rate content with little substance won’t satisfy users, no matter what search tool they use. Regardless of how each engine formatted its response (table or no table), the information was thorough and provided what I wanted to know.

That being said, if you want to have a chance at being pulled into the responses from these generative AI tools, you need to be thorough, too. Think about the questions people might ask around a topic and build from there.

So, for example, if someone is looking for the best home insurance companies, what information would they want to know? Likely, they’d want to know things like cost or claim success. Making sure you include that core information in your page makes you more likely to get pulled in AI responses.

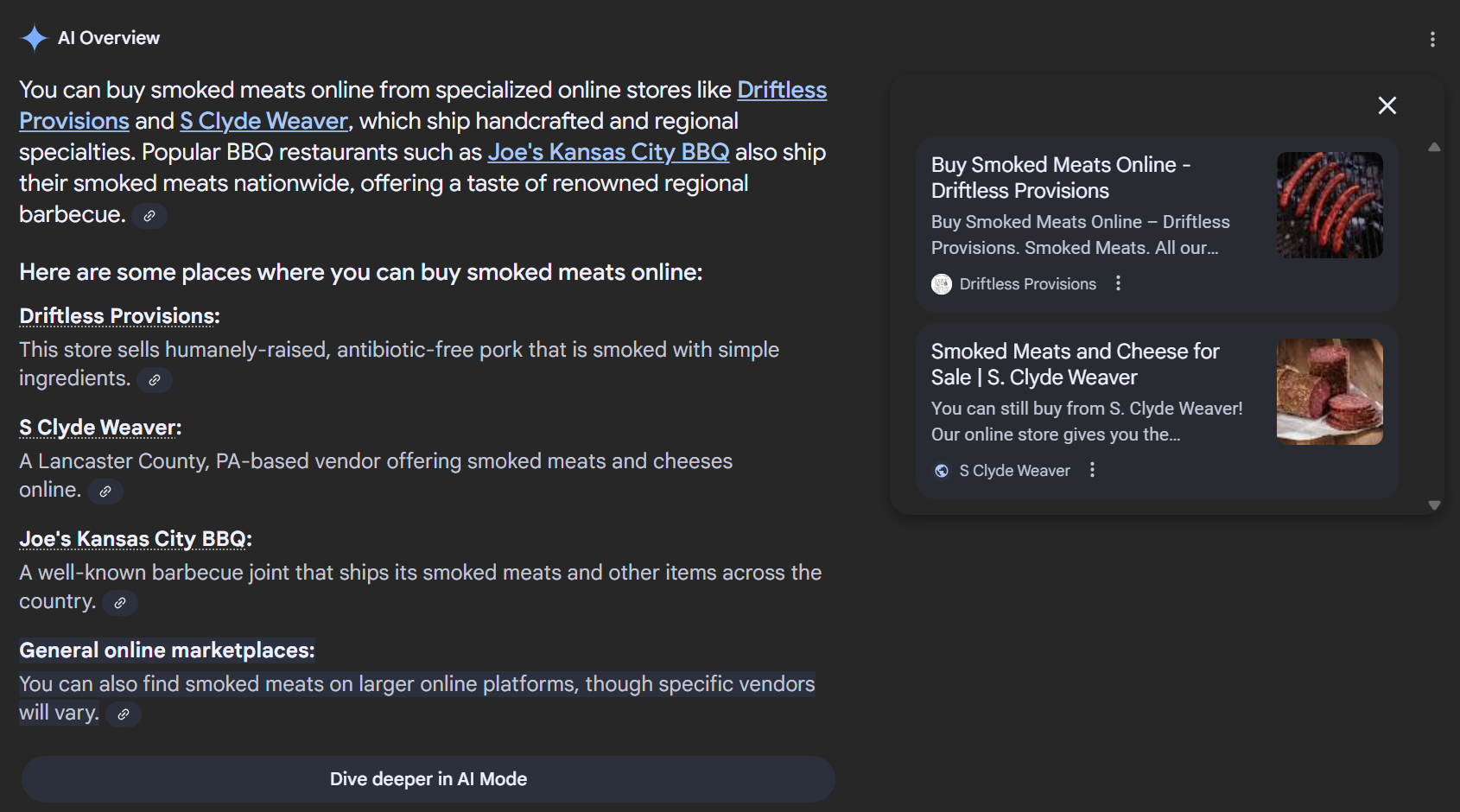

Query type #3: Shopping-based

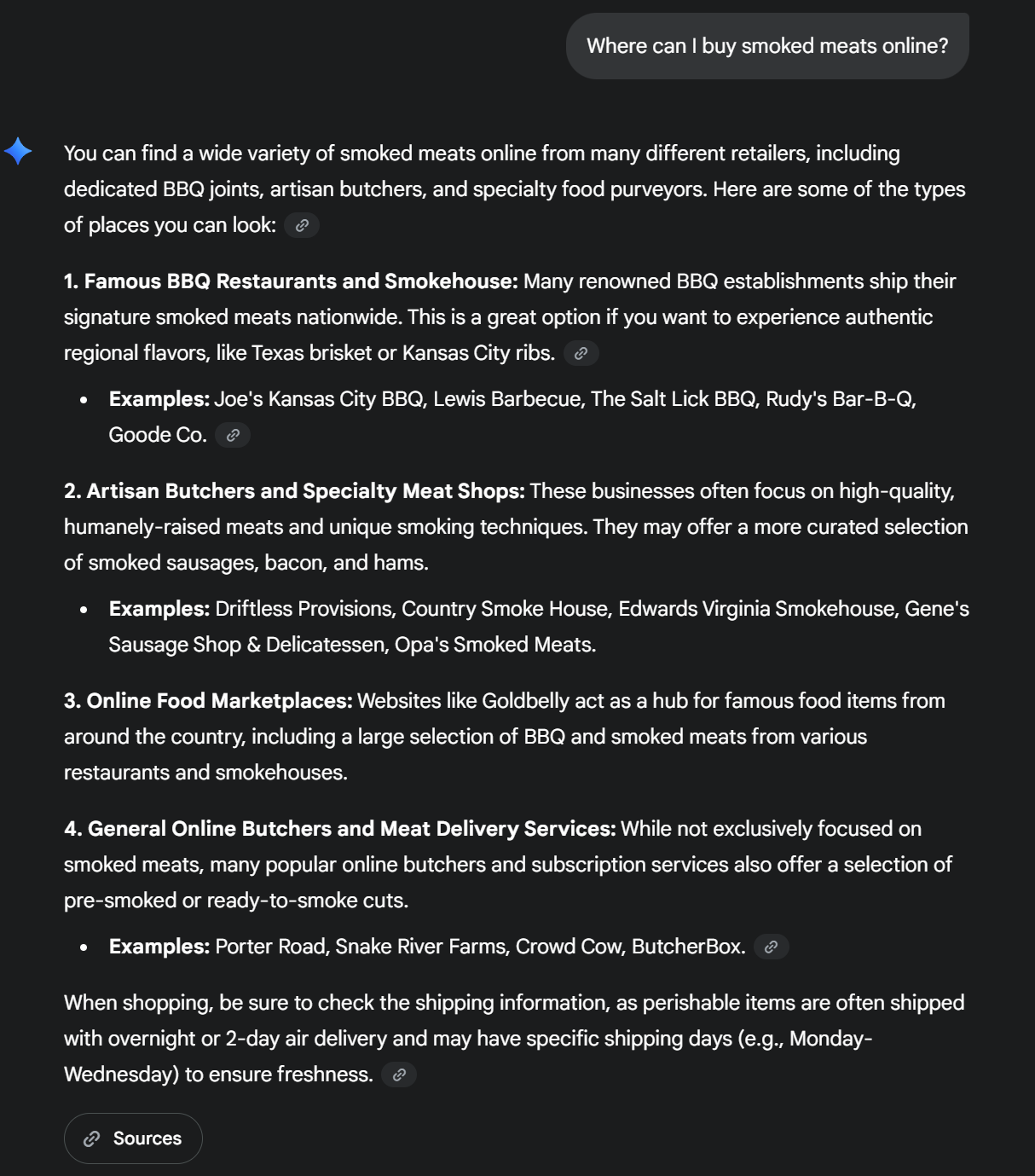

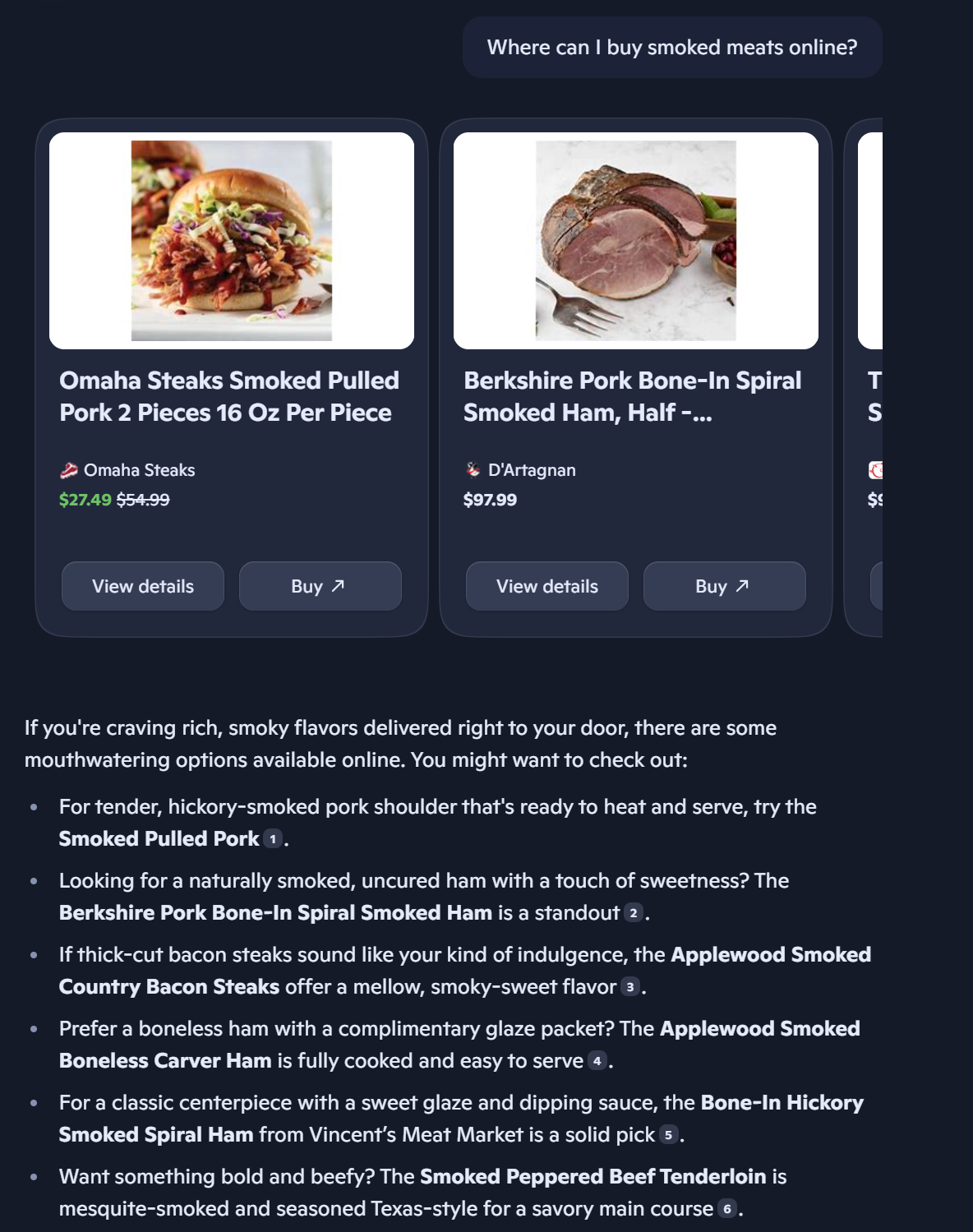

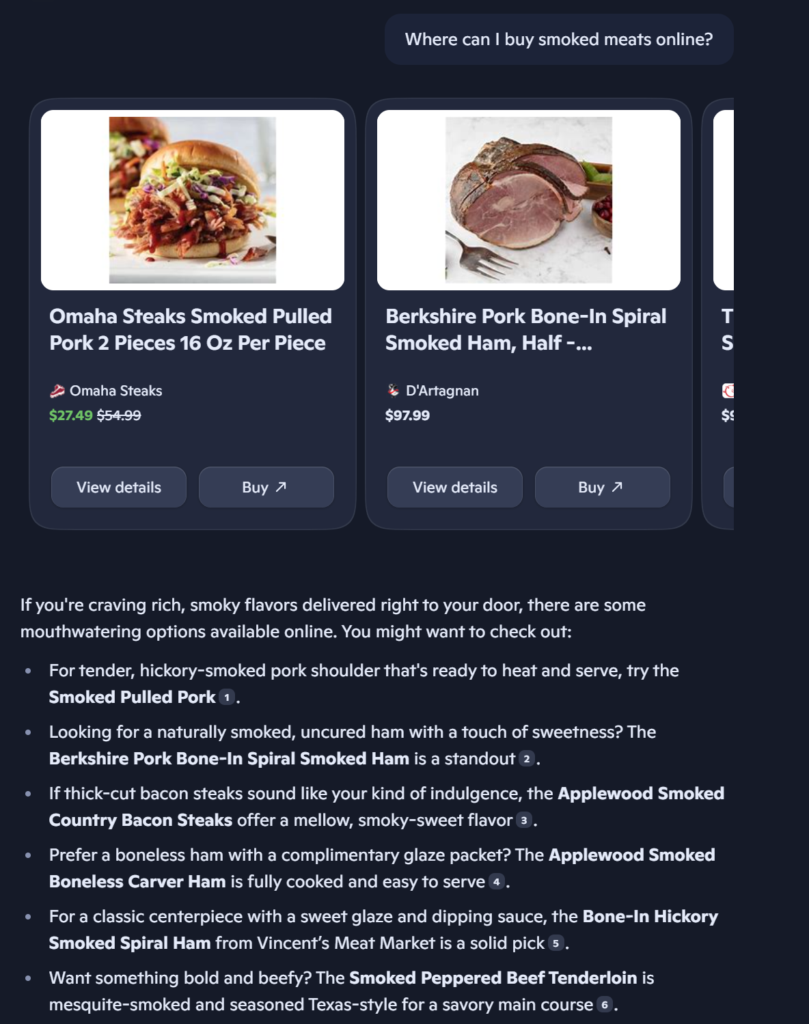

For this query type, I opted for a simple query that people often ask, “where can I buy [item]”. So, I explored this query type with the question “Where can I buy smoked meats online?”

Here were the responses:

Query: Where can I buy smoked meats online?

AI Overviews’ response

ChatGPT’s response

Google AI Mode’s response

Perplexity’s response

Claude’s response

Gemini’s response

Copilot’s response

Here are some key insights from these queries:

- Most generative engines structured responses with categories. Even with a more BOFU intent, the majority of generative engines still presented the options in an informational way by categorizing options. It was framed more as a brand discovery search, versus a product-based search.

- AI Overviews, AI Mode, and Perplexity gave a local option. The other generative engines stuck to more nationally known brands and vendors.

- Generative engines were not consistent with their responses. There was some overlap between AI search engines, but not a ton.

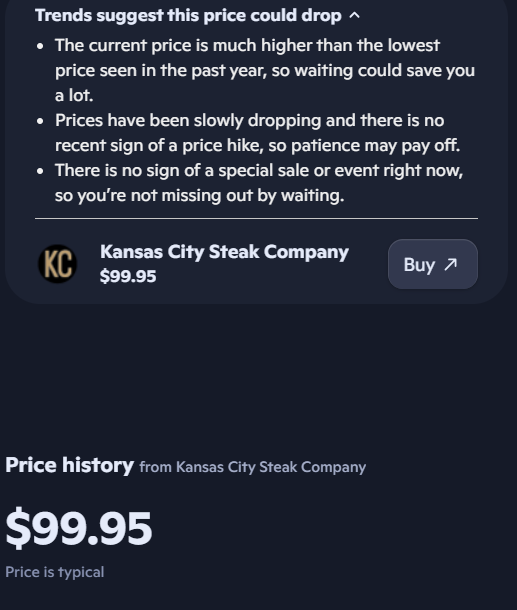

Yet again, Copilot differentiated its response from the pack. Most of the responses categorized the places to buy smoked meats and shared a description of what that place offers. Copilot, instead, shared shoppable listings with specific products, taking a more product-first approach vs. brand-first approach like the other generative engines did.

If you opted to get more information about the product, you could see information like star rating, brand, and weight. Copilot also integrates price history data to help you understand if you’re getting the best deal.

How you can take action based on this exploration

So, if you’re targeting shopping-based queries, what can you take away from this exploration?

Build your branding

For purchase-intent searches like this one, building your branding is crucial. You need to build a strong association between your brand and what you offer (products or services) so that your brand shows up in the mix of results.

Build up keyword associations with your brand. So, like in this example, a place that sells smoked meats would target keywords related to that product to help build brand association between their company and their product.

Enhance your product or service descriptions

With bottom of the funnel searches, providing a thorough description of your product that can provide context for generative engines is a must.

In the example query, many of the generative engines categorized the options — they’re plugged into categories like “national retailers,” or “artisan options.” By integrating descriptors like this into your product listings, you can help provide context for AI, but also let it know where to slot your business in results.

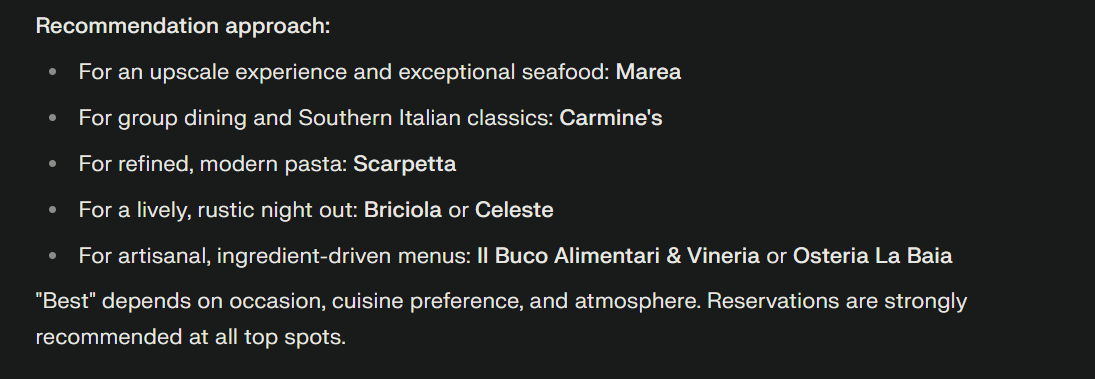

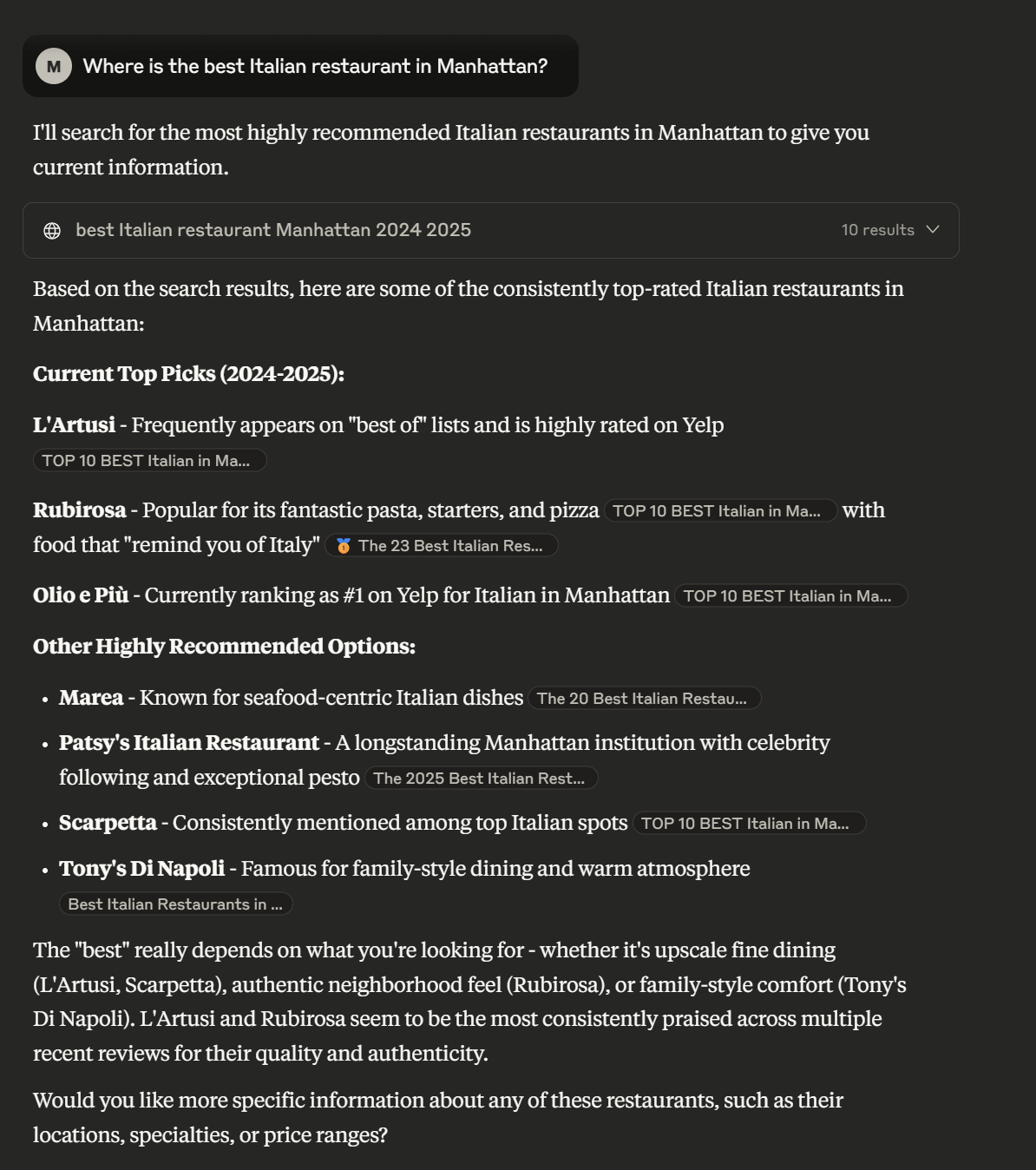

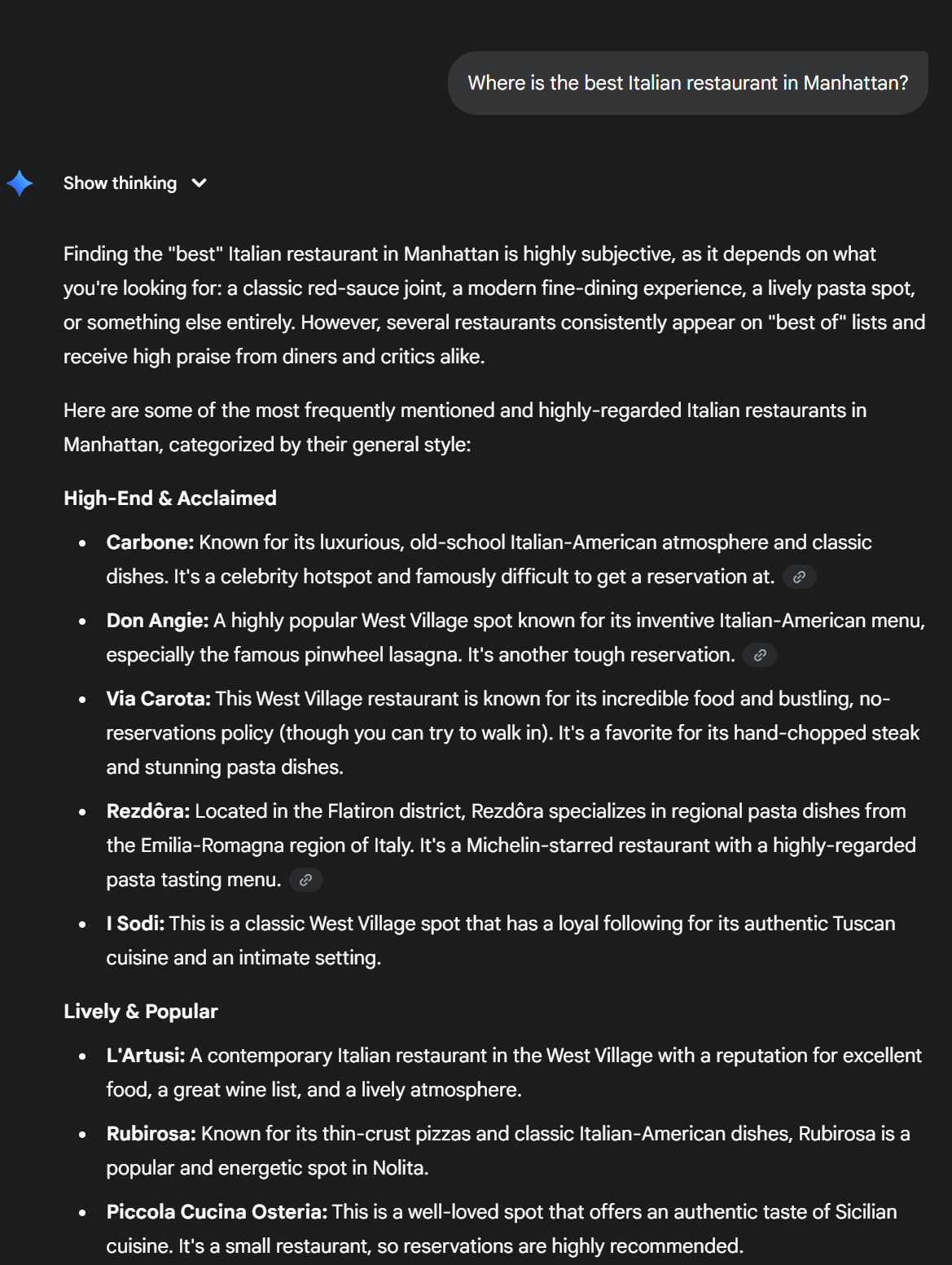

Query type #4: Location-based

The last type of query we’ll look at is the location-based query. For this local query, I used the question “Where is the best Italian restaurant in Manhattan?”

Here were the responses:

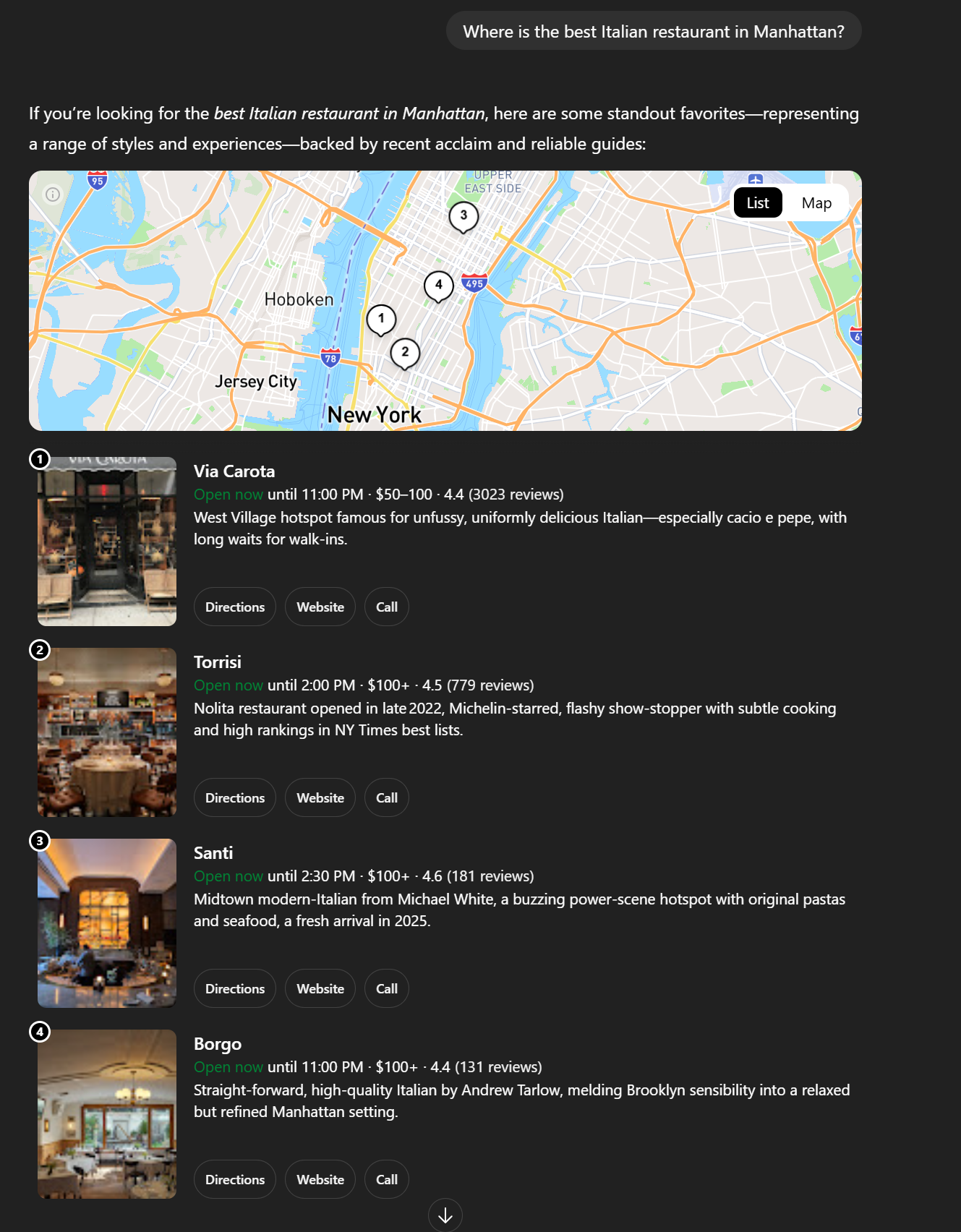

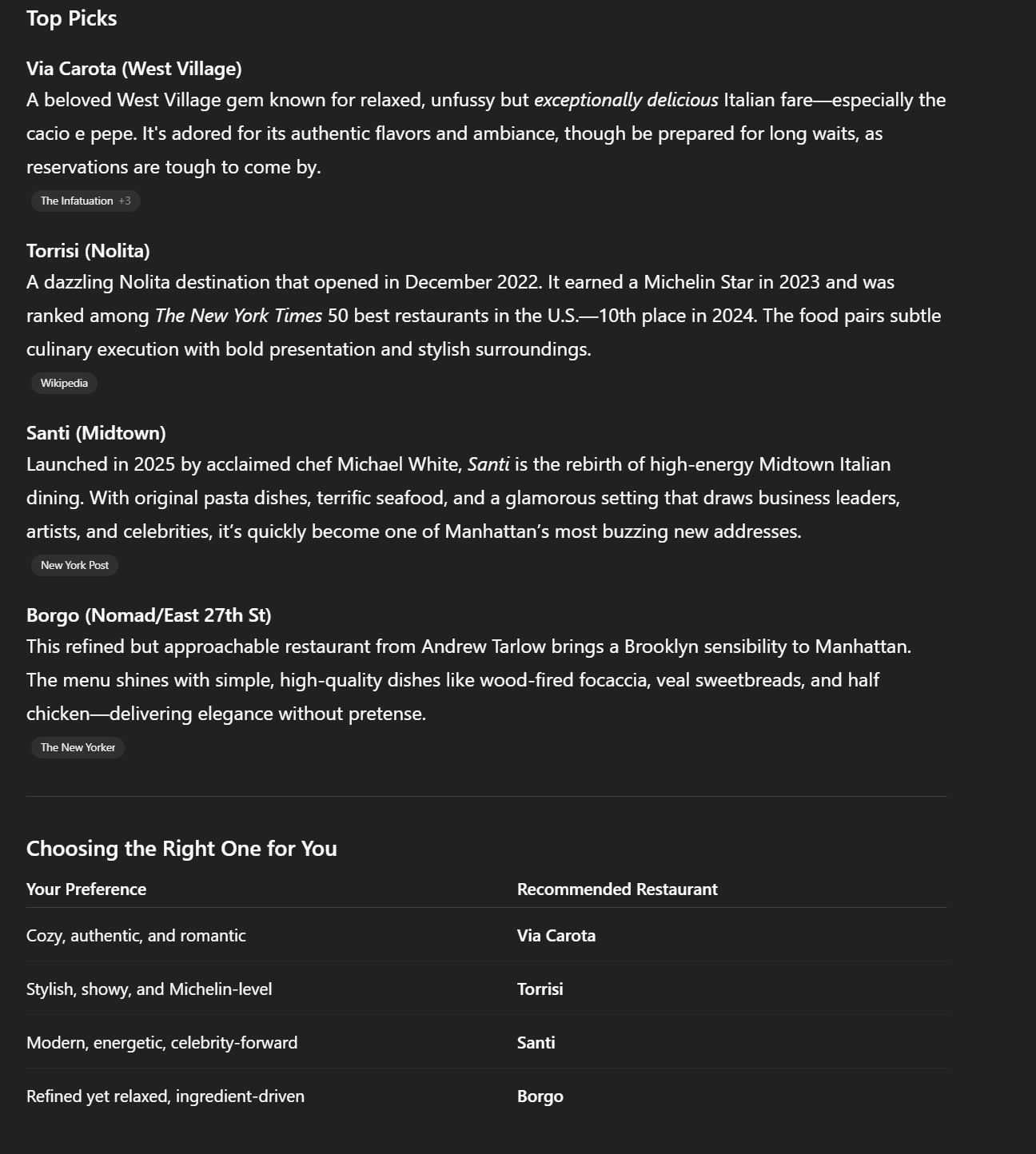

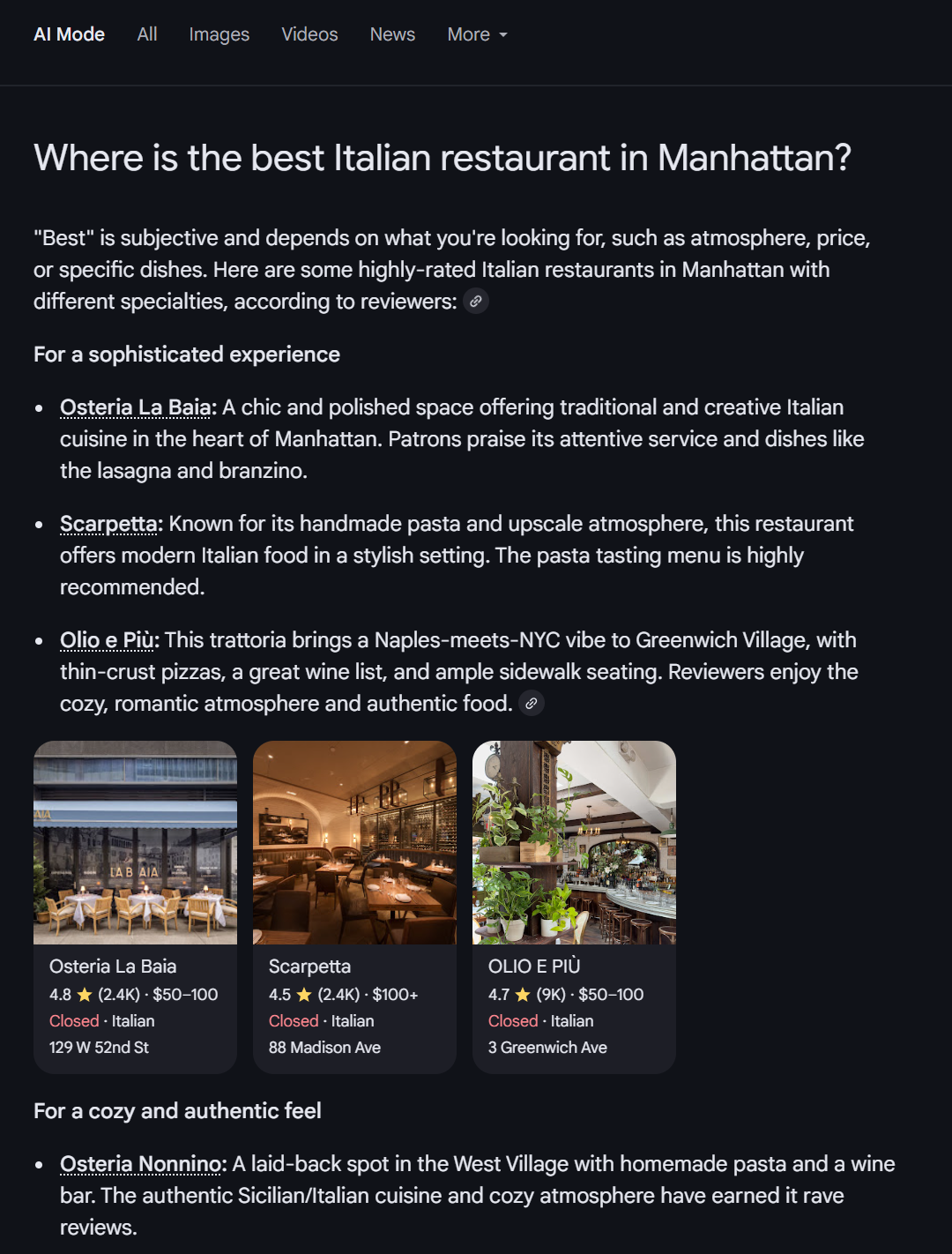

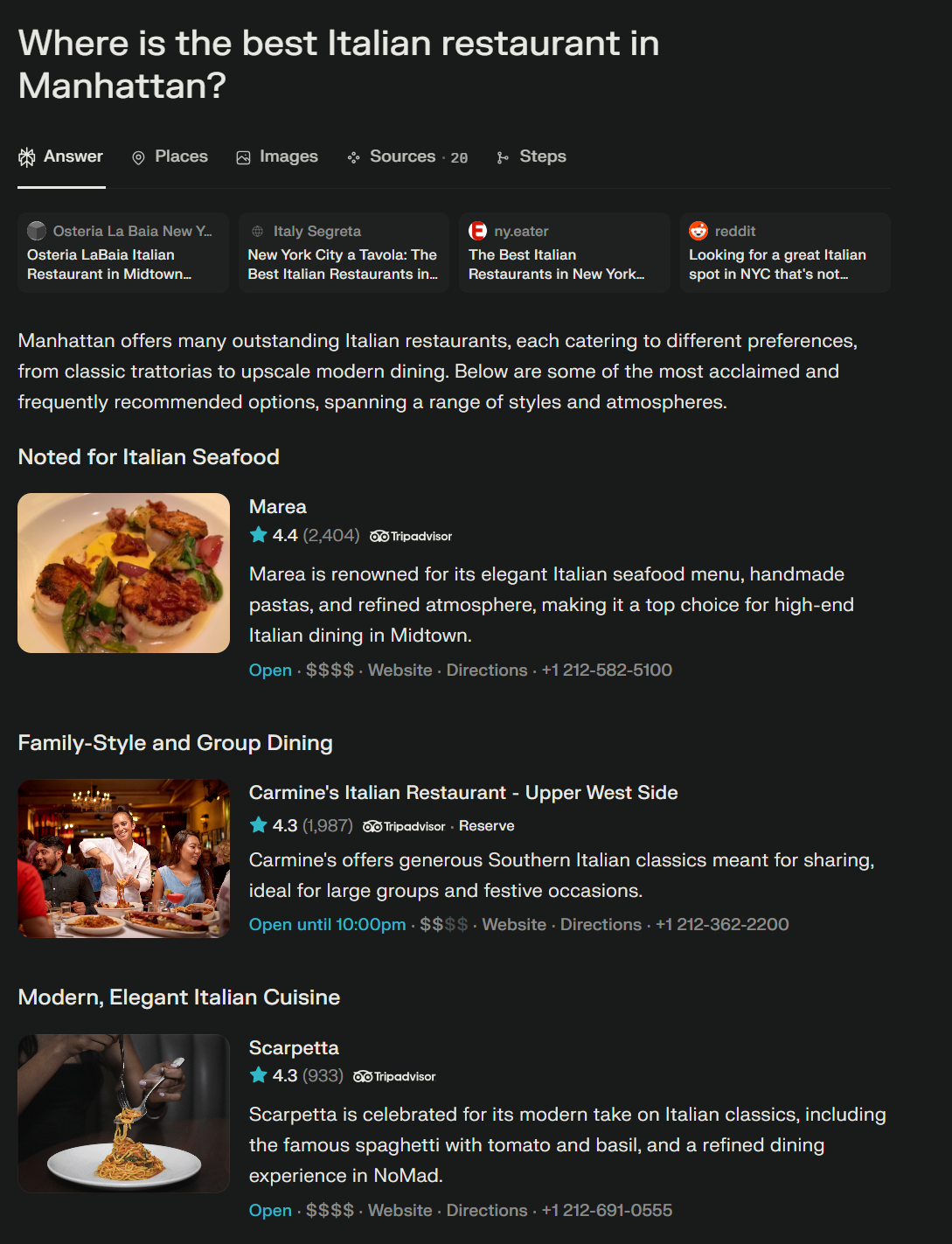

Query: Where is the best Italian restaurant in Manhattan?

ChatGPT’s response

Google AI Mode’s response

Perplexity’s response

Claude’s response

Gemini’s response

Copilot’s response

Here are some key insights from these queries:

- There is little overlap between recommendations. While certain generative engines had matching recommendations, there was no one restaurant that appeared in all of them. At most, the same restaurant appeared in 2–3 platforms’ responses, indicating that all these engines pull from different sources.

- AI Overviews didn’t trigger for this query. It could speak to how these BOFU-type searches are currently being held out from receiving AI Overviews.

- AI Mode, Perplexity, and ChatGPT took a local-listing style approach to their responses. They delivered responses that include local business listings with important information like reviews, price, hours of operation, and more.

- ChatGPT and AI Mode were the only ones that triggered a map. The map showed the locations of the recommended restaurants. In ChatGPT, if you clicked one of the map markers, it jumped you down to that restaurant in the list of recommendations. In AI Mode, it pulled up the Google Business Profile in the sidebar.
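If you want to measure overlap like this for your own queries, a simple count is enough. The sketch below tallies how many engines recommend each business. The restaurant names and lists are hypothetical placeholders, not the results from this exploration, and `overlap` is an illustrative helper.

```python
from collections import Counter

# Hypothetical recommendations, entered by hand after running the same query
# in each engine. These are NOT the results from this exploration.
recommendations = {
    "ChatGPT": ["Restaurant A", "Restaurant B", "Restaurant C"],
    "AI Mode": ["Restaurant B", "Restaurant D"],
    "Perplexity": ["Restaurant A", "Restaurant E"],
    "Claude": ["Restaurant F", "Restaurant B"],
}


def overlap(recs):
    """Count how many engines recommend each business (once per engine)."""
    counts = Counter()
    for names in recs.values():
        counts.update(set(names))  # set() avoids double-counting within an engine
    return counts


counts = overlap(recommendations)

# Businesses recommended by more than one engine.
shared = {name: n for name, n in counts.items() if n > 1}

# Businesses recommended by every engine (often an empty list).
in_every_engine = [name for name, n in counts.items() if n == len(recommendations)]

for name, n in counts.most_common():
    print(f"{name}: {n} of {len(recommendations)} engines")
```

Running the same tally over several days gives a rough sense of which businesses show up consistently and which appear only once.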

How you can take action based on this exploration

Now that you have an idea of how different generative engines respond to the same local query, what can you do to optimize your business’s presence in them? At the base of it, following local SEO best practices will turn out the best results.

Here are some especially important things to do:

Prioritize optimizing your local information

Optimizing your local business information is crucial for appearing in generative AI searches. If you’re trying to appear in AI Mode, Perplexity, or ChatGPT, it’s especially important since they present local listings to searchers.

But overall, local searches (no matter the engine) call for local information, so it’s important that your business’s local information is well established. This includes optimizing information like your:

- Hours of operation

- Location (or service areas)

- Cost

- Reviews

Keeping this information up to date will help ensure these engines know your website is relevant to the local search, and that your information is accurate if, and when, it appears.

💡 Bonus tip: Build up reviews on your profile. Reviews will help build trust with your brand, which can help you improve visibility in local and AI searches.

Diversify your local business appearances

None of the generative engines had exactly the same business listings, which means they’re likely pulling from different sources. That means you’ll want to diversify where your business appears across the web, so you’re more likely to get pulled into the search results.

So, build up your presence in local directories like:

- Google Business Profile

- Yelp

- TripAdvisor

You can also beef up your appearance in industry-niche directories and aggregator sites that pull “best of” lists.

Regardless of where you appear, make sure your information is accurate across the web, including your contact information, hours of operation, and services you provide. This ensures that, as you diversify your presence, everyone will get the same information regardless of where they find it (or where LLMs pull it from).
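A quick way to audit that consistency is to normalize each listing and flag the fields that disagree. This is a minimal sketch under a few stated assumptions: the listing data is typed in by hand (no directory API is called), phone numbers are US-style 10-digit numbers, and the business details are invented for illustration. The helper names are hypothetical.

```python
import re


def normalize_phone(phone):
    """Keep digits only; assumes US-style numbers, so keep the last 10 digits."""
    digits = re.sub(r"\D", "", phone)
    return digits[-10:]


def normalize_text(value):
    """Lowercase, drop periods and commas, and collapse whitespace."""
    cleaned = value.lower().replace(".", "").replace(",", "")
    return " ".join(cleaned.split())


def find_mismatches(listings):
    """Return the fields whose normalized values differ between directories."""
    mismatches = {}
    fields = {field for listing in listings.values() for field in listing}
    for field in sorted(fields):
        normalize = normalize_phone if field == "phone" else normalize_text
        values = {
            source: normalize(listing.get(field, ""))
            for source, listing in listings.items()
        }
        if len(set(values.values())) > 1:
            mismatches[field] = values
    return mismatches


# Hypothetical listing data copied by hand from each directory.
listings = {
    "Google Business Profile": {
        "phone": "(212) 555-0100",
        "address": "12 Example St., New York, NY",
        "hours": "Mon-Sat 5pm-10pm",
    },
    "Yelp": {
        "phone": "212-555-0100",
        "address": "12 example st new york ny",
        "hours": "Mon-Sat 5pm-11pm",
    },
    "TripAdvisor": {
        "phone": "+1 212 555 0100",
        "address": "12 Example St, New York, NY",
        "hours": "Mon-Sat 5pm-10pm",
    },
}

for field, values in find_mismatches(listings).items():
    print(f"Mismatch in {field}: {values}")
```

In this made-up example, the phone numbers and addresses match once formatting differences are stripped out, so only the hours are flagged. The normalization here is deliberately simple; abbreviations such as "St" versus "Street" would still be reported as a mismatch and need a manual look.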

Overall insights from this generative AI response exploration

After looking at the different query types and seeing how different generative engines respond to those same queries, there are some overarching themes you can see throughout.

Here are some things to keep in mind, based on the results of this exploration:

- Formatting is important: Not only is the quality and value of your content important, but how you present it matters too. Delivering organized content will help generative engines better understand your content and how it’s relevant to the query, which can increase your chances of appearing in the results.

- Query wording can shift the results: Just a one-word difference can impact the information people get when conducting a query on a generative engine. It’s important to account for related query variations to ensure your business still shows up in those search results.

- Generative engines diversify their sources: Not all generative engines rely on the same sources to produce answers. With every query, the responses varied between engines, indicating that each engine pulls information from different places. Diversifying your presence across the web can help ensure you’re appearing in places where these engines pull information.

- User-first approach wins out: Anticipating what the user needs from a query will help you have a better chance of being pulled as a resource by any generative engine. Putting the user first to ensure a clear presentation of information that’s well organized will go a long way.

In conclusion, generative AI search is not one-size-fits-all. Different engines prioritize different information, formats, and citations, meaning you need a multi-engine approach to your search strategy.

OmniSEO® is the multi-engine search strategy you need to win AI search

With search diversifying and generative engines producing variations of answers, you need a search strategy that’s going to help you stay on top of all of it. With OmniSEO®, you can track and monitor your performance across generative engines to see where you (and your competitors) appear across these engines.

Connect with us today to see how we can help you elevate your AI search game to drive real business growth!

